Next.js vs TanStack

Kyle Gill, Software Engineer, Particl

See this post for inspiration.

Over the past few months, I’ve moved as much code as possible away from Next.js. While I see why people are attracted to it and its growing ecosystem, I am no longer sipping the Kool-Aid. TanStack, while not perfect either, provides better abstractions that are more than adequate for the vast majority of projects.

In this post, I am going to share my personal opinions about the two projects as they relate to making web applications.

What is Next.js good at?

Before I discuss its shortcomings, I want to cover where I think Next.js excels, drawing on my recent experience with it.

At Particl, we took a bet on where the ecosystem was moving for our web properties. Vercel had hired many members of the React core team (around 25% of it) and was aggressively hiring open source maintainers. It looked like Next.js would keep receiving support and would have the most integrations with the latest packages.

For that reason, we chose Next.js to build our web app, as well as our website (when we moved off of Webflow).

Everything OOTB

Getting set up was not difficult, adding support for most packages was a breeze, and there were integration guides for everything from MUI to Markdoc, as well as more enterprise tools like Datadog Real User Monitoring.

The speed with which you can get set up out of the box is great, and other ancillary tools often just work™️ with it. If you want Jest, someone from the Next.js team has already merged a native plugin that gets things configured semi-magically.
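For reference, that Jest setup is roughly the following jest.config.ts, adapted from the Next.js docs. It assumes jest, jest-environment-jsdom, and ts-node (so Jest can read a TypeScript config file) are installed:

```ts
// jest.config.ts
import type { Config } from 'jest'
import nextJest from 'next/jest.js'

// nextJest loads next.config.js and .env files from this directory
// so tests see the same configuration as the app
const createJestConfig = nextJest({ dir: './' })

const config: Config = {
  coverageProvider: 'v8',
  // Browser-like DOM for component tests (requires jest-environment-jsdom)
  testEnvironment: 'jsdom',
}

// Exported this way so next/jest can load the (async) Next.js config first
export default createJestConfig(config)
```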

The marketing lingo labels Next.js “The React Framework for the Web”, and it’s definitely one of the easiest ways to get a React app up and running out there.

High-scale optimization

Next.js is also great at really high-scale use cases, with the ability to surgically tweak the rendering pattern of individual pages across your app. You don’t have to inflate your build times to render millions of pages; you can render them on demand with server-side rendering (SSR). Authenticated routes can stay client-side rendered (CSR) if you want.
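In the App Router, that per-page control is mostly a single exported constant in each route file. A minimal sketch using the documented route segment config; the route path and page content are hypothetical:

```tsx
// app/orders/page.tsx (hypothetical route)

// Route segment config: render this page on demand for every request (SSR)
// instead of prerendering it at build time. Other pages can keep the default.
export const dynamic = 'force-dynamic'

export default function OrdersPage() {
  // Because of force-dynamic, this timestamp changes on every request
  return <p>Rendered at {new Date().toISOString()}</p>
}
```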

You can shave off milliseconds with advanced APIs like partial prerendering (PPR), edge functions, and caching. Other features like streaming and selective hydration can make your app feel snappier and help isolate performance bottlenecks precisely. For an ecommerce site, every micro-optimization like this can sell more products.

There are Next.js-specific APIs like incremental static regeneration (ISR) that make it easier to serve high-traffic content that doesn’t need to be refreshed very frequently (and without extra builds).
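Next.js handles this for you, but the idea underneath ISR is stale-while-revalidate: serve the cached page, and once it is older than the revalidate window, regenerate it for the next visitor. Here is a toy model of that behavior in plain TypeScript. It is not how Next.js implements ISR; RevalidatingCache and render are hypothetical names, timestamps are passed in explicitly, and "background" regeneration is modeled as happening right after the stale copy is served:

```typescript
type Entry = { html: string; renderedAt: number }

class RevalidatingCache {
  private entries = new Map<string, Entry>()
  private revalidateSeconds: number
  private render: (path: string, now: number) => string

  constructor(revalidateSeconds: number, render: (path: string, now: number) => string) {
    this.revalidateSeconds = revalidateSeconds
    this.render = render
  }

  get(path: string, now: number): string {
    const entry = this.entries.get(path)
    if (!entry) {
      // First request: render on demand and cache it (no build step needed)
      const html = this.render(path, now)
      this.entries.set(path, { html, renderedAt: now })
      return html
    }
    const ageSeconds = (now - entry.renderedAt) / 1000
    if (ageSeconds > this.revalidateSeconds) {
      // Stale: regenerate for the next visitor, but still serve the old copy now
      this.entries.set(path, { html: this.render(path, now), renderedAt: now })
    }
    return entry.html
  }
}

// Usage: a 60-second window, like export const revalidate = 60
let renders = 0
const cache = new RevalidatingCache(60, (path, now) => {
  renders++
  return `${path} rendered at ${now}`
})

const first = cache.get('/products/1', 0) // rendered on demand
const fresh = cache.get('/products/1', 30_000) // within the window: cached copy
const stale = cache.get('/products/1', 90_000) // stale copy served, re-render triggered
const updated = cache.get('/products/1', 91_000) // the regenerated copy
```

Only two renders happen across four requests, which is why ISR avoids both full rebuilds and rendering on every hit.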

Also included is nice out-of-the-box behavior for things like code splitting, which does feel like something that should be done for you.

The list of extra APIs is pretty long.

3-letter acronyms are overkill for most apps

The problem with the 3-letter acronyms and the extra APIs is that most of them are optimizations I don’t really need. In addition to feeling unnecessary at smaller scale, they are also hard to justify financially and difficult to grasp.

Scale

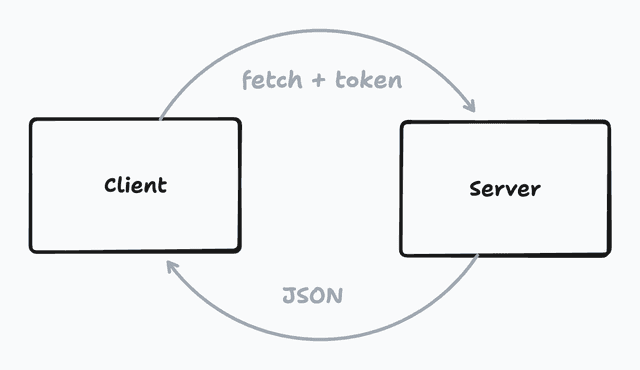

For some use cases, I can see the justification for micro-performance improvements. For a small to mid-sized startup, I really don’t need much more than a server and a client. I’m okay with a couple hundred extra milliseconds while we find product-market fit.

But the users! How dare you!

Our customers buy our software because it solves their problem.

Our customers want their problem solved in seconds, instead of days or weeks. At our size, saving an extra 100ms is a premature optimization.

Financial incentives

Because incentives rule everything around me, I understand why marketing dollars get poured into selling cloud software. That makes a lot of these features feel like things you need, but do you really?

Each is almost inevitably tied to a paid service:

- Edge compute (middleware.ts)
- Image transformation (next/image)
- ISR reads/writes (export const revalidate = 60)
- Serverless function execution (app/api/route.ts)

Vercel is financially incentivized to push its own services (of course); it is a business, after all. However, the more you buy into these APIs, the harder it becomes to justify the cost of switching. Perhaps the Build Output API makes it easier to run on other providers, though I haven’t tried it, or self-hosting, myself.

My financial incentives push me to spend as few dollars as possible on my code, which is why I consider this my current optimal architecture for B2B SaaS:

Difficult new concepts

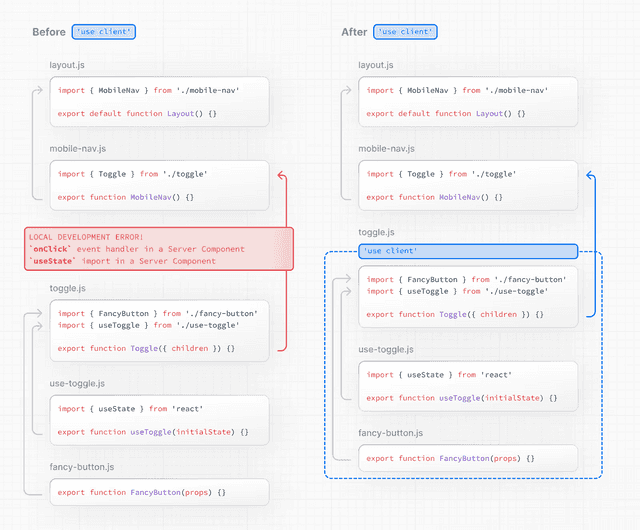

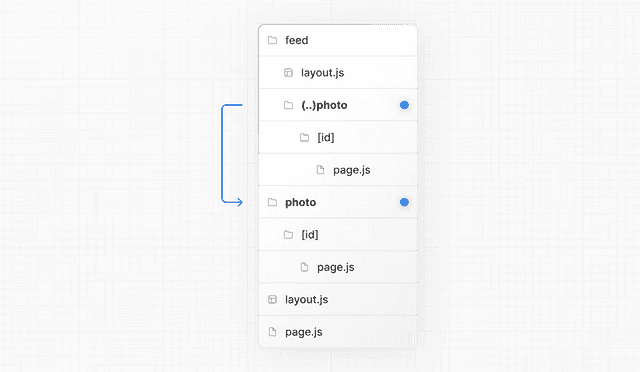

The App Router is riddled with footguns and new APIs that are unrelated to React but sometimes blur the line with it. It’s hard to know where Next.js begins and React ends.

The docs are full of graphics that are beautiful to look at, but they are there to explain advanced concepts that don’t fit a simpler mental model.

I have spent years building web apps across jQuery, Ruby on Rails, and React in its many flavors, but I still can’t figure out how to do things in Next.js without consulting the cryptic tomes:

I can’t ⌘+Space to search through available APIs for magic file-based conventions.

I’m unable to understand the path that my code follows when I apply a magic route directive like: export const experimental_ppr = true.

In general, I find the learning curve to be steep, and the mental model to be difficult to grasp.

Debugging is a nightmare

If this were just another Vite plugin or ordinary fs sorcery, debugging errors wouldn’t be that big of a deal. Errors would be traceable to their source through normal stack traces. However, because of all the abstraction required to make it work, debugging is a nightmare for me (and I know a rather unfortunate amount about ASTs, bundlers, and the web).

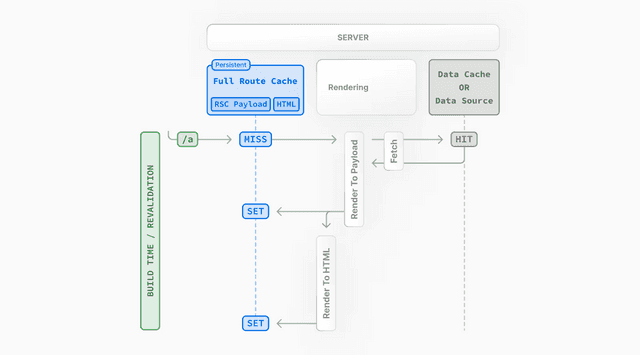

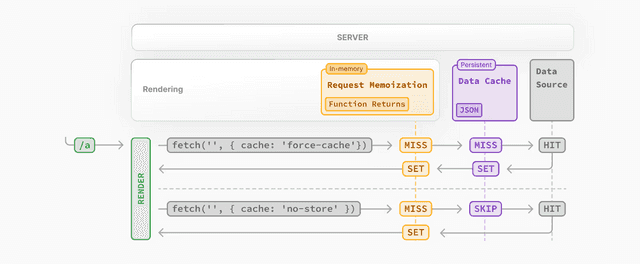

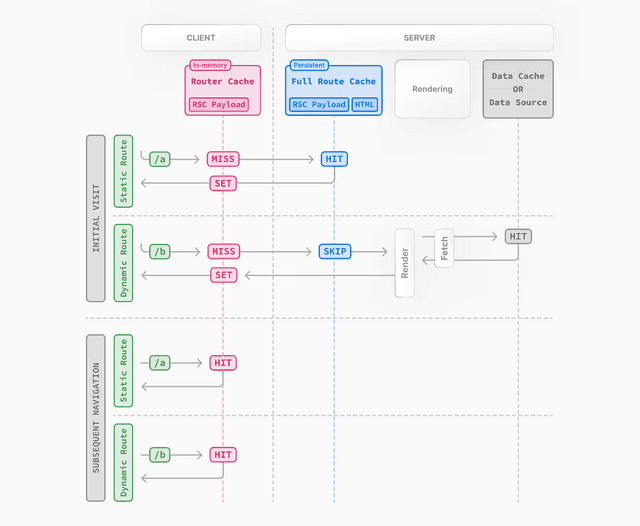

Not to mention there are 3 separate caches!

- Full Route Cache

- Data Cache

- Router Cache

Each one more difficult to dig into than the last. If I put Redis in front of my app, at least I can query it. Heaven forbid I look up the data stored in one of these.

Many of these APIs are still marked as unstable or experimental, so criticism may not be totally fair.

The browser also has a built-in cache, but for whatever reason doesn’t make the cut here.
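The browser’s HTTP cache needs no framework at all: any server can opt a response into it with a Cache-Control header, and you can inspect the result in the browser’s network tab. A minimal sketch, assuming a runtime with the standard Fetch API Response global (Node 18+, Deno, Bun, or a worker); cachedJson is a hypothetical helper name, and private assumes per-user data that shared caches such as CDNs should not store:

```typescript
// Hypothetical helper: build a JSON response the browser may cache
function cachedJson(data: unknown, maxAgeSeconds: number): Response {
  return new Response(JSON.stringify(data), {
    headers: {
      'Content-Type': 'application/json',
      // The browser may reuse this response for maxAgeSeconds without
      // contacting the server; "private" keeps shared caches out of it
      'Cache-Control': `private, max-age=${maxAgeSeconds}`,
    },
  })
}

const res = cachedJson({ plan: 'pro' }, 60)
console.log(res.headers.get('Cache-Control')) // "private, max-age=60"
```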

SPAs are an afterthought

Next.js offers a single doc on building SPAs with the App Router; most of the docs instead encourage you to embrace its server-side APIs and assume a full-stack JavaScript setup.

Features that are hugely valuable for building great software, like type-safe routing, are left as experimental proofs of concept in GitHub branches while new paradigms are pushed instead.

At its worst, the dev server took 2 minutes to compile our app. At its best, we were lucky to get around 3-4 seconds per page or full refresh.

When I tried to upgrade to Turbopack, I was met with (still) slow dev server compilation times and a lack of support for older packages we hadn’t upgraded. After some hacking, we got things running and it helped, but it still didn’t hold a candle to Vite, which loads the same code in milliseconds.

Turbopack itself favors the app directory and lacks some optimizations for the pages directory. I imagine they’ll get there someday, but when you have 2 codebases to maintain, it makes sense that one has to be the second priority.

TanStack + Vite is simple and elegant

You really don’t need much. You can deploy anywhere and get code that compiles fast and is a delight to work with.
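For a sense of how little setup that takes, here is a sketch of a vite.config.ts for a React app using TanStack Router’s file-based routing. It follows TanStack’s documented Vite plugin, assuming a @tanstack/router-plugin version that exports TanStackRouterVite (newer releases may name the export differently), @vitejs/plugin-react, and the default src/routes directory:

```ts
// vite.config.ts
import { defineConfig } from 'vite'
import react from '@vitejs/plugin-react'
import { TanStackRouterVite } from '@tanstack/router-plugin/vite'

export default defineConfig({
  // The router plugin generates the typed route tree from src/routes;
  // TanStack's docs place it before the React plugin
  plugins: [TanStackRouterVite(), react()],
})
```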

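TanStack Router gives me autocomplete for every page in my app, including search params that are validated by Zod. I feel like what is happening is exposed and plain:

```tsx
import { createFileRoute } from '@tanstack/react-router'
import { z } from 'zod'

// Hypothetical data helper; replace with your own API call
async function fetchPost(postId: string) {
  const res = await fetch(`/api/posts/${postId}`)
  return res.json()
}

// Search params: decoded from the URL, then validated.
// .catch(0) falls back to 0 when readOffset is missing or invalid.
const postSchema = z.object({
  readOffset: z.number().catch(0),
})

export const Route = createFileRoute('/posts/$postId')({
  // In a loader: params.postId is typed from the '$postId' path segment
  loader: ({ params }) => fetchPost(params.postId),
  // Or in a component
  component: PostComponent,
  // Search params decoded and validated
  validateSearch: postSchema,
})

function PostComponent() {
  // In a component! Both values are fully typed
  const { postId } = Route.useParams()
  const { readOffset } = Route.useSearch()
  return (
    <div>
      Post ID: {postId} (read offset: {readOffset})
    </div>
  )
}
```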

TanStack Query gives me a global storage of server-side state, that is easy to reason about and debug.

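```tsx
import { useQuery } from '@tanstack/react-query'

// Must render inside a QueryClientProvider somewhere up the tree
function Repo() {
  const { isPending, error, data } = useQuery({
    // Cache key: every component using ['repoData'] shares this data
    queryKey: ['repoData'],
    queryFn: () =>
      fetch('https://api.github.com/repos/TanStack/query').then((res) => res.json()),
  })

  if (isPending) return <p>Loading...</p>
  if (error) return <p>An error has occurred: {error.message}</p>
  return <div>{data.full_name}</div>
}
```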
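To make "global storage of server-side state" concrete, here is a toy model of the core idea: one shared cache entry per serialized query key, so every component asking for the same key shares a single request. It is a hypothetical sketch, not TanStack Query’s implementation (ToyQueryCache and its methods are made-up names), and it deliberately leaves out staleness, refetching, retries, and garbage collection:

```typescript
type QueryKey = readonly unknown[]

class ToyQueryCache {
  // Keyed by JSON.stringify(queryKey); the real library uses a more careful hash
  private promises = new Map<string, Promise<unknown>>()
  private data = new Map<string, unknown>()

  fetchQuery<T>(queryKey: QueryKey, queryFn: () => Promise<T>): Promise<T> {
    const hash = JSON.stringify(queryKey)
    const existing = this.promises.get(hash)
    // Reuse the in-flight (or settled) promise so all callers share one request
    if (existing) return existing as Promise<T>
    const promise = queryFn().then((result) => {
      // Store the resolved value where anything (e.g. devtools) can read it
      this.data.set(hash, result)
      return result
    })
    this.promises.set(hash, promise)
    return promise
  }

  // Inspect what is cached for a key; undefined until the request resolves
  getQueryData<T>(queryKey: QueryKey): T | undefined {
    return this.data.get(JSON.stringify(queryKey)) as T | undefined
  }
}

// Usage: two components asking for ['repoData'] trigger a single fetch
let calls = 0
const fetchRepo = () => {
  calls++
  return Promise.resolve({ name: 'query' })
}

const queryCache = new ToyQueryCache()
const a = queryCache.fetchQuery(['repoData'], fetchRepo)
const b = queryCache.fetchQuery(['repoData'], fetchRepo)
```

Because the store is just a map keyed by plain arrays, it is easy to reason about: ask for a key, and you can see exactly what is cached for it.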

Each of these has its own built-in dev tools (imagine that)!

When I need them, more advanced APIs are available, and they always feel like an extension of the current API: more options on the same plain functions.

TanStack doesn’t make money when I deploy with its APIs, and I feel like I can grok the entire API surface.

Then there’s Vite: it’s fast, gets out of the way, and just works. It has most of the good parts of Next.js, without the baggage. There is a growing ecosystem, and no company is selling it as a cloud service (yet).

I’m done with Next.js

I still love React, and I still love a lot of Vercel’s offering (and recommend it), but I just can’t keep up with Next.js. At the end of the day, I don’t see enough value in the added complexity and the increasingly complicated API surface.

When the day comes where I reach the hyperscale necessary to shave off those extra milliseconds, maybe I’ll be back to look around.

If you like Next.js and it works for you and your team, that’s awesome. I’m happy for you. To me, TanStack feels like the right abstraction.